Background

This project centers on component tracking and traceability across the entire production line, using sensor fusion, computer vision, robotics, and deep learning to improve accuracy and lower cost. The approach is to build a sensor fusion package that will scan the factory space and build accurate images and a 3D volumetric map of the space and its surfaces in multiple spectral modalities. Additionally, the technology will be designed for flexible integration with existing PLM and factory automation systems, allowing for widespread, seamless adoption.

Objective

Create a system that will improve component tracking and traceability using an open and modular design for flexible configuration within existing PLM and factory automation systems.

Technical Approach

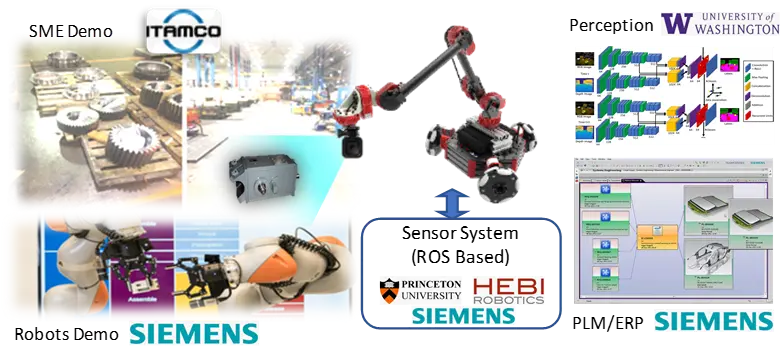

The sensor fusion package scans the factory floor to build accurate images and a 3D volumetric map of the space and its surfaces. Deep learning-based vision and perception algorithms extract the relevant parts from the information-rich sensor data. A mobile robotic sensory platform extends the sensors’ range and coverage in complex factory environments. Tracking algorithms then merge the sensor data with the process model, improving accuracy and enabling traceability. Additionally, the project centers on an open and modular design for flexible configuration and seamless integration with existing PLM systems.
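The summary does not specify how the tracking algorithms merge sensor data with the process model. As a purely illustrative sketch, one common way to combine two estimates of a part's position is an inverse-variance weighted average, in which the more certain source has more influence. The `Estimate` class, the `fuse` function, and the scalar-variance uncertainty model below are hypothetical and are not taken from the project.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Estimate:
    """Hypothetical 2D position estimate for a tracked part."""
    position: Tuple[float, float]  # (x, y) on the factory floor, in metres
    variance: float                # scalar uncertainty, in square metres


def fuse(predicted: Estimate, observed: Estimate) -> Estimate:
    """Combine a process-model prediction with a sensor observation.

    Assumption: each source is weighted by the inverse of its variance,
    so the more certain source pulls the result toward itself.
    """
    w_pred = 1.0 / predicted.variance
    w_obs = 1.0 / observed.variance
    total = w_pred + w_obs
    x = (w_pred * predicted.position[0] + w_obs * observed.position[0]) / total
    y = (w_pred * predicted.position[1] + w_obs * observed.position[1]) / total
    # The fused estimate is more certain than either input alone.
    return Estimate(position=(x, y), variance=1.0 / total)


# Example: the process model expects the part at a workstation, while the
# camera-based detection places it slightly away from that spot.
model_guess = Estimate(position=(0.0, 0.0), variance=1.0)
camera_fix = Estimate(position=(2.0, 4.0), variance=1.0)
fused = fuse(model_guess, camera_fix)
```

With equal variances, the fused position lies midway between the two inputs. A production tracker would more likely use a full filter, such as a Kalman filter, with per-axis covariances.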

Impact

The team developed a novel system and method for component tracking and traceability via sensor fusion and digital twin technologies, resulting in several new patents. The final demonstration showcased the system’s CAD-based perception algorithm for semantic segmentation; accurate robot perception of its own location and of the locations of the parts it identified and tracked; repeatable robot navigation with global and local path planning and obstacle avoidance; and tracking of part locations as the parts move, based on perception and logic. The system is composed of several technology nodes that will be useful to the team and ARM Members both as a system and individually: object detection using alternative spectral sensing, object detection with localization and recalibration, a CAD-based object detection pipeline, robot perception and control, and the tailored sensor package design.

The industrial partner, ITAMCO, estimated a significant return on investment for this technology, with a payback period of only 6 months and a 5-year ROI of more than $300K for one deployed robot.

Participants

Siemens, HEBI Robotics, ITAMCO, Princeton University, University of Washington